A/B split testing, backed by a sound process and methodology, is an effective way to optimize conversion rates. However, for many companies with subscription business models, conversion is only the beginning of a longer relationship with the customer. For these companies, Customer Lifetime Value (CLV) is the most critical KPI of all.

CLV CHALLENGES IN A/B TESTING

Measuring the CLV impact of a website A/B test can be challenging for several reasons, including the following:

- Using conversion rate as an indicator

A conversion rate increase does not always indicate an increase in CLV. A company may increase conversion by testing a discount off a new customer’s first month, but if these new customers are less likely to renew, it can actually hurt CLV.
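The discount scenario above can be made concrete with a small worked comparison. This is a minimal sketch under simplifying assumptions that are not from the original: a constant monthly renewal probability, no upsells, no discounting of future revenue, and entirely made-up prices and rates. It scores each experience by expected value per visitor (conversion rate × CLV per customer) rather than by conversion alone.

```python
from dataclasses import dataclass


@dataclass
class Experience:
    """Hypothetical inputs for one test experience (all numbers are illustrative)."""
    name: str
    conversion_rate: float    # share of visitors who subscribe
    first_month_price: float  # price paid for month one
    monthly_price: float      # price of each renewal month
    renewal_rate: float       # assumed constant chance of renewing each month (0 <= r < 1)

    def expected_renewal_months(self) -> float:
        # Geometric series r + r^2 + r^3 + ... = r / (1 - r)
        return self.renewal_rate / (1 - self.renewal_rate)

    def clv_per_customer(self) -> float:
        return self.first_month_price + self.monthly_price * self.expected_renewal_months()

    def value_per_visitor(self) -> float:
        return self.conversion_rate * self.clv_per_customer()


control = Experience("control", 0.04, 20.0, 20.0, 0.80)
discount = Experience("first-month discount", 0.05, 10.0, 20.0, 0.70)

for exp in (control, discount):
    print(f"{exp.name}: conversion {exp.conversion_rate:.1%}, "
          f"value per visitor ${exp.value_per_visitor():.2f}")
```

With these invented inputs the discount wins on conversion (5% vs. 4%) but loses on value per visitor (about $2.83 vs. $4.00), because its customers renew less often.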

- Testing period vs. CLV period

CLV can take months to calculate accurately. Among other things, companies must consider conversion rate, average order value, renewal rate (i.e., “stick rate”) and future upsells. It is rare, and often impractical, for a website A/B test to run for months or years. A/B tests usually last only a few weeks, which makes it difficult to connect the content tested on the site to its long-tail effect.
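To see why a few weeks of data says little about CLV, the sketch below combines the four factors named above into expected revenue over a chosen number of billing months. It is an illustrative model, not the author’s formula: it assumes a constant monthly renewal rate, a flat upsell amount per renewal month, no discounting, and hypothetical input values.

```python
def expected_revenue(aov: float, renewal_rate: float, upsell_per_month: float, months: int) -> float:
    """Expected revenue per converted customer over the first `months` billing months.

    Month 1 is the initial order. Month k (k >= 2) is reached with probability
    renewal_rate ** (k - 1) and earns the order value plus any upsell revenue.
    """
    total = aov  # month 1: initial order, no upsell assumed
    for k in range(2, months + 1):
        survival = renewal_rate ** (k - 1)
        total += survival * (aov + upsell_per_month)
    return total


def clv_per_visitor(conversion_rate: float, aov: float, renewal_rate: float,
                    upsell_per_month: float, months: int) -> float:
    """Scale per-customer value by the conversion rate to get value per test visitor."""
    return conversion_rate * expected_revenue(aov, renewal_rate, upsell_per_month, months)


# Hypothetical inputs: $20 orders, 90% monthly renewal, $5/month in upsells.
during_test = expected_revenue(20.0, 0.90, 5.0, months=1)  # roughly what a short test observes
three_years = expected_revenue(20.0, 0.90, 5.0, months=36)
print(f"share of 36-month value visible in month 1: {during_test / three_years:.1%}")
```

Under these made-up inputs, the first month captures less than 10% of the 36-month value, which is why the test window alone cannot settle a CLV question.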

- Extreme sensitivity to slight inaccuracies

Many popular testing platforms use JavaScript to swap and deliver content. Because browser settings and connection speed can affect JavaScript, it does not always execute as intended, which can introduce a small amount of unwanted effects. Because CLV compounds through renewals and upsells, it is extremely sensitive to unintended testing effects caused by JavaScript execution problems or test setup mistakes.
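One way to quantify this sensitivity is to ask what happens when a fraction of customers is recorded under the wrong experience. The sketch below assumes mislabeling is random (unrelated to how valuable the customer is) and uses hypothetical group sizes and CLV figures. For equal-sized groups, the observed difference shrinks to (1 − 2p) times the true difference, where p is the swap rate. If mislabeling is correlated with customer behavior (for example, slow connections), the bias could go in either direction, which this sketch does not model.

```python
def observed_means(n_a: int, mean_a: float, n_b: int, mean_b: float, swap_rate: float):
    """Observed mean CLV per experience label when a fraction `swap_rate` of each
    group's customers is recorded under the other experience's ID (random mislabeling)."""
    # Customers labeled A: correctly labeled A customers plus mislabeled B customers.
    a_count = (1 - swap_rate) * n_a + swap_rate * n_b
    a_total = (1 - swap_rate) * n_a * mean_a + swap_rate * n_b * mean_b
    # Customers labeled B: correctly labeled B customers plus mislabeled A customers.
    b_count = (1 - swap_rate) * n_b + swap_rate * n_a
    b_total = (1 - swap_rate) * n_b * mean_b + swap_rate * n_a * mean_a
    return a_total / a_count, b_total / b_count


# Hypothetical: experience B is truly worth $5 more per customer.
for rate in (0.0, 0.001, 0.05):
    obs_a, obs_b = observed_means(10_000, 100.0, 10_000, 105.0, rate)
    print(f"swap rate {rate:.1%}: observed difference ${obs_b - obs_a:.2f}")
```

At a 5% swap rate the observed difference falls from $5.00 to $4.50 per customer; multiplied across a large customer base and several years of renewals, that understatement can change a business decision.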

TIPS FOR CREATING A TEST BUILT FOR CLV MEASUREMENT

Working with a client seasoned in direct marketing and testing, we used the following tactics to build A/B tests that make it possible to accurately measure the impact on CLV:

- Use unique IDs for each test experience.

To measure the CLV of an A/B test, tie conversions to renewals and upsells with a unique ID per test experience. For example, your website can assign a unique ID to experience A orders and a different one to experience B orders. These unique IDs should show up in your transaction data and also be passed to your customer tracking system. The IDs can be used to tie CLV back to specific test experiences and continue measuring test impact long after the test has been deactivated.

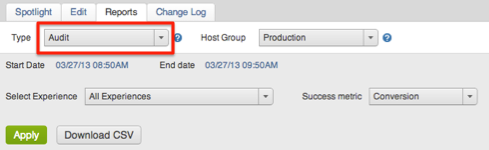
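The sketch below shows how per-experience IDs let renewals and upsells recorded months later be attributed back to the experience that produced the original conversion. The record shapes, field names and ID values are hypothetical stand-ins for your own transaction and customer tracking data, and it assumes each customer’s first order carries the experience ID that counts.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    """Converting order captured during the test (hypothetical shape)."""
    order_id: str
    customer_id: str
    experience_id: str  # ID stamped at checkout, e.g. "EXP-A" or "EXP-B"
    amount: float


@dataclass(frozen=True)
class Payment:
    """Renewal or upsell charge recorded after the test ended (hypothetical shape)."""
    customer_id: str
    amount: float


def revenue_per_customer_by_experience(orders, later_payments):
    experience_of = {}
    for order in orders:
        # Keep the experience ID from the customer's first converting order.
        experience_of.setdefault(order.customer_id, order.experience_id)
    totals = defaultdict(float)
    for order in orders:
        totals[experience_of[order.customer_id]] += order.amount
    for payment in later_payments:
        exp = experience_of.get(payment.customer_id)
        if exp is not None:  # ignore customers who did not convert in the test
            totals[exp] += payment.amount
    customers = Counter(experience_of.values())
    return {exp: totals[exp] / customers[exp] for exp in customers}


orders = [
    Order("O1", "c1", "EXP-A", 20.0), Order("O2", "c2", "EXP-A", 20.0),
    Order("O3", "c3", "EXP-B", 10.0), Order("O4", "c4", "EXP-B", 10.0),
]
renewals = [Payment("c1", 20.0), Payment("c1", 20.0), Payment("c3", 20.0), Payment("c5", 99.0)]
print(revenue_per_customer_by_experience(orders, renewals))
```

Because attribution depends only on the stored ID, the same query can be rerun every month as new renewals arrive, long after the test itself has been switched off.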

- Don’t trust summary reports. Analyze detailed results.

All testing platforms provide summary reports of how each test experience performs against the control. However, this type of reporting lacks the detail required to confirm that you are looking at clean results, or to break results down by the unique IDs described above. Some popular platforms provide detailed transaction data containing fields such as product IDs or descriptions, number of products per order, revenue per order, transaction IDs and other customizable fields. With this level of detail you can review results carefully and identify any discrepancies that could skew your CLV measurement.
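A simple check that detailed data makes possible is comparing, transaction by transaction, the experience a visitor was assigned against the experience ID recorded on the order. This is a hedged sketch: it assumes both exports have already been loaded as dictionaries keyed by transaction ID, and the structure and values shown are hypothetical, not any specific platform’s export format.

```python
def audit_assignments(assigned: dict, recorded: dict) -> dict:
    """Compare each transaction's assigned experience (from the testing platform's
    detailed export) with the experience ID stamped on the order record."""
    shared = assigned.keys() & recorded.keys()
    mismatched = sorted(t for t in shared if assigned[t] != recorded[t])
    missing = sorted(assigned.keys() - recorded.keys())  # no matching order record
    rate = len(mismatched) / len(shared) if shared else 0.0
    return {"mismatch_rate": rate, "mismatched": mismatched, "missing": missing}


# Hypothetical exports keyed by transaction ID.
platform_export = {"T1": "A", "T2": "B", "T3": "A", "T4": "B"}
order_records = {"T1": "A", "T2": "A", "T3": "A"}
print(audit_assignments(platform_export, order_records))
```

A nonzero mismatch rate, or transactions missing from either side, is the kind of discrepancy a summary report would hide.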

- Be creative and work around the tech imperfections.

When small data inaccuracies have a big impact on long-term ROI, even a small amount of bad data is unacceptable. For some of our clients, a 0.5% shift in conversion can have as much as a $20 million impact! Recently we were struggling with a testing platform that assigned the wrong unique ID about 5% of the time: IDs from Experience A were being applied to orders in Experience B, and vice versa. To solve this problem, we used Test&Target to split traffic and reload the test pages with a unique campaign code per experience. The campaign code was then fed into a content management system that displayed the test experience associated with that code. The same code served as the experience’s unique ID: it was tied to users in the corresponding experience and passed along with their order information. This reduced the misassignment rate from about 5% to 0.1%.
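The key idea in this workaround is that a single value, the campaign code, both selects the content and is written to the order, so the two cannot disagree. The sketch below illustrates only the site side of that idea. The traffic split itself is assumed to happen in the testing tool (Test&Target in the example above) and is not modeled here. The `cmpid` parameter, the codes, the page names and the dictionary-based session are all hypothetical, and this is not any platform’s or CMS’s actual API.

```python
from urllib.parse import parse_qs, urlparse

CODE_PARAM = "cmpid"  # hypothetical query parameter carrying the campaign code
CONTENT_BY_CODE = {   # hypothetical campaign codes -> content the CMS displays
    "CLVTEST-A": "offer_page_a",
    "CLVTEST-B": "offer_page_b",
}


def handle_landing(session: dict, url: str) -> str:
    """Read the campaign code from the reloaded test page URL, store it once,
    and return the content associated with the stored code."""
    codes = parse_qs(urlparse(url).query).get(CODE_PARAM, [])
    if codes and codes[0] in CONTENT_BY_CODE and "campaign_code" not in session:
        session["campaign_code"] = codes[0]  # first valid code wins; later URLs cannot overwrite it
    return CONTENT_BY_CODE.get(session.get("campaign_code"), "default_page")


def place_order(session: dict, order_id: str, amount: float) -> dict:
    """Stamp the order with the same code that chose the content."""
    return {"order_id": order_id, "amount": amount,
            "campaign_code": session.get("campaign_code")}


visitor = {}
print(handle_landing(visitor, "https://example.com/offer?cmpid=CLVTEST-B"))
print(place_order(visitor, "O-1001", 19.99))
```

Storing the code once per session is a design choice in this sketch: it keeps a visitor pinned to one experience even if a later URL carries a different code.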

IN SUMMARY

If your business decisions are based on CLV, then CLV is the KPI to measure in any optimization effort. Other KPIs, such as conversion rate and revenue, are good indicators, but ultimately they only influence CLV.

By using a unique ID for each experience and sharing it with your customer tracking systems, you can tie everything together and continue to review performance after the test period has ended. Analyzing detailed test transaction data can help you confirm your data integrity or uncover inaccuracies that might otherwise have led to poor decisions. Finally, being creative with your test setup and deployment can help you overcome the limitations of imperfect out-of-the-box testing solutions.